ora2pg. Миграция базы данных Oracle на Pangolin

Утилита ora2pg отсутствует в продукте в версии 7.1.0.

Версия: 23.0.

В исходном дистрибутиве установлено по умолчанию: нет.

Связанные компоненты:

Для установки необходимых компонентов требуются права

sudo all.Компоненты, поставляемые в дистрибутиве продукта (каталог

migration_tools/ora2pg):

- ora2pg (ora2pg-23.0.tar.tar.gz);

- DBD::Oracle (DBD-Oracle-1.80.tar.gz);

- DBD:Pg (DBD-Pg-3.15.0.tar.gz).

- DBD-Oracle.spec

- DBD-Pg.spec

- ora2pg.spec

Компоненты RPM для Oracle Client:

- oracle-instantclient18.5-basic-18.5.0.0.0-3.x86_64.rpm;

- oracle-instantclient18.5-jdbc-18.5.0.0.0-3.x86_64.rpm;

- oracle-instantclient18.5-sqlplus-18.5.0.0.0-3.x86_64.rpm;

- oracle-instantclient18.5-devel-18.5.0.0.0-3.x86_64.rpm.

Дополнительные компоненты:

- cpan;

- libaio1;

- libaio-devel;

- readline-devel.x86_64;

- perl-core;

- perl-devel;

- perl-version;

- perl-DBI.x86_64;

- perl-Test-Simple;

- libpq-devel

- postgresql-devel.

Схема размещения: не используется.

Ora2pg является opensource утилитой для миграции данных из Oracle в Pangolin.

Утилита

ora2pgявляется одним из возможных вариантов и инструментов миграции. Окончательный выбор инструмента и его использование находится в зоне ответственности продуктовой команды, которая планирует замену используемой СУБД с Oracle на Pangolin.

Утилита Ora2Pg состоит из:

- скрипта Perl (

ora2pg); - модуля Perl (

Ora2Pg.pm).

Для работы утилиты нужно:

- скорректировать файл конфигурации

ora2pg.conf– установить DSN для базы данных Oracle; - установить нужный тип экспорта:

- таблица с ограничениями (TABLE with constraints);

- представление (VIEW);

- материализованное представление (MVIEW);

- табличное пространство (TABLESPACE);

- последовательность (SEQUENCE);

- индекс (INDEX);

- триггер (TRIGGER);

- предоставление (GRANT);

- функция (FUNCTION);

- процедура (PROCEDURE);

- пакет (PACKAGE);

- раздел (PARTITION);

- тип (TYPE);

- вставить/скопировать (INSERT or COPY);

- внешние данные (FDW);

- запрос (QUERY);

- инструмент ETL(KETTLE);

- синоним (SYNONYM).

Возможности утилиты Ora2Pg:

- по умолчанию

Ora2Pgэкспортирует в файл, который можно загрузить в базу данных Pangolin с помощью клиента psql; - импорт непосредственно в целевую базу данных PostgreSQL путем установки ее DSN в файле конфигурации.

Включенные функции:

- экспорт полной схемы базы данных (таблицы, представления, последовательности, индексы) с уникальным, первичным, внешним ключом и ограничениями проверки;

- экспорт разрешений/привилегий для пользователей и групп;

- экспорт разделов диапазона/списка и вложенных разделов;

- экспорт выбранной таблицы;

- экспорт схемы Oracle в схему PostgreSQL;

- экспорт предопределенных функций, триггеров, процедур, пакетов;

- экспорт полных данных или с предложением WHERE;

- полная поддержка Oracle BLOB-объекта как PostgreSQL BYTEA;

- экспорт представлений Oracle в виде таблиц PostgreSQL;

- экспорт пользовательских типов Oracle;

- обеспечение базового автоматического преобразования кода PLSQL в PLPGSQL;

- экспорт таблицы Oracle как внешней таблицы – оболочки данных (FDW, Foreign Data Wrapper);

- экспорт материализованного представления;

- вывод отчета о содержимом базы данных Oracle;

- оценка стоимости миграции базы данных Oracle;

- оценка уровня сложности миграции базы данных Oracle;

- оценка стоимости переноса кода PL/SQL из файла;

- оценка стоимости переноса запросов Oracle SQL, хранящихся в файле;

- создание XML-файлов (.ktr) для использования с Penthalo Data Integrator (Kettle);

- экспорт локатора Oracle и пространственной геометрии в PostGIS;

- экспорт DBLINK как оболочки данных Oracle FDW;

- экспорт синонимов в виде представлений;

- экспорт каталога как внешней таблицы или каталога с расширением

external_file; - отправка списка SQL-инструкций через несколько подключений PostgreSQL;

- определение различий между базами данных Oracle и PostgreSQL для целей тестирования.

Доработка

Доработка не проводилась.

Данный компонент не входит в состав продукта, поэтому поставляется в виде бинарных файлов в составе дистрибутива в каталоге 3rdparty/migration_tools/.

Ограничения

Ограничения отсутствуют.

Установка

Oracle Client

В данном документе в качестве примера используется Oracle Client версии 18.5.

sudo yum -y install oracle-instantclient18.5-basic-18.5.0.0.0-3.x86_64.rpm

sudo yum -y install oracle-instantclient18.5-devel-18.5.0.0.0-3.x86_64.rpm

Проверка версии Perl - должна быть версии perl 5.10 и выше:

perl -v

Пример ответа:

This is perl 5, version 16, subversion 3 (v5.16.3) built for x86_64-linux-thread-multi

(with 44 registered patches, see perl -V for more detail)

Copyright 1987-2012, Larry Wall

Perl may be copied only under the terms of either the Artistic License or the

GNU General Public License, which may be found in the Perl 5 source kit.

Complete documentation for Perl, including FAQ lists, should be found on

this system using "man perl" or "perldoc perl". If you have access to the

Internet, point your browser at http://www.perl.org/, the Perl Home Page.

Драйвер Perl

Установите модуль для подключения приложений на Perl к различным типам баз данных (PostgreSQL, SQLite, MySQL, MSSQL, Oracle, Informix, Sybase, ODBC и другим):

sudo yum -y install perl-DBI.x86_64

Требуется версия 1.614 и выше. Пример результата установки и вывод версии:

Package perl-DBI-1.627-4.el7.x86_64 already installed and latest version

Драйвер Oracle

Для переноса базы данных Oracle необходимо установить модули DBD::Oracle

Установка DBD::Oracle.

Для ora2pg необходим модуль DBD::Oracle для подключения к БД Oracle из Perl DBI.

-

распаковать архив:

tar -xvf DBD-Oracle-1.80.tar.gz && cd DBD-Oracle-1.80 -

выполнить сборку и инсталляцию:

perl Makefile.PL -p

make

sudo make install

Программа инсталляции в завершении работы выдает напоминание о необходимости установки переменной ORACLE_HOME:

WARNING: Setting ORACLE_HOME env var to /usr/lib/oracle/18.5/client64 for you.

Переменные окружения Oracle

Выполните установку переменных окружения.

Возможно установка переменных в /etc/environment. В случае отсутствия прав на изменение /etc/environment, переменные можно установить для текущей сессии модификацией файла .bash_profile.

cd /usr/lib/oracle/18.5/client64/bin/

export PATH="$PATH:/usr/lib/oracle/18.5/client64/bin"

export ORACLE_HOME=/usr/lib/oracle/18.5/client64

export LD_LIBRARY_PATH="$LD_LIBRARY_PATH:/usr/lib/oracle/18.5/client64/lib"

или

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$ORACLE_HOME/lib:$ORACLE_HOME

Порядок установки переменной LD_LIBRARY_PATH описан в документе «Руководство администратора», раздел «Порядок установки переменной LD_LIBRARY_PATH в окружении Pangolin Manager».

Настройка c ldconfig:

sudo sh -c "echo /usr/lib/oracle/18.5/client64/lib > /etc/ld.so.conf.d/oracle-instantclient.conf"

sudo ldconfig

sudo mkdir -p /usr/lib/oracle/18.5/client64/lib/network/admin

Появление следующей ошибки информирует о некорректной установке переменных или ошибке в пути каталогов:

sqlplus: error while loading shared libraries: libsqlplus.so: cannot open shared object file: No such file or directory

Драйвер PostgreSQL

Для ora2pg необходим модуль DBD::Pg для подключения к БД Postgres из Perl DBI.

-

распаковать архив:

tar -xvf DBD-Pg-3.15.0.tar.gz && cd DBD-Pg-3.15.0 -

выполнить сборку:

perl Makefile.PL -pЕсли выдается вопрос:

Configuring DBD::Pg 3.15.0

Path to pg_config?выполнить команду:

export PATH=$PATH:/usr/pgsql-se-04/binВ случае, если на используемом сервере отсутствует экземпляр Pangolin, необходимо скопировать с другого рабочего сервера из каталога

$PGHOMEпапкиbin,libиinclude. Содержимое этих каталогов должно иметь права на чтение и запуск для пользователя, от имени которого выполняется установка и будет проводиться запуск утилиты.Пример расположения каталогов:

/usr/pgsql-se-04/bin/

/usr/pgsql-se-04/lib/

/usr/pgsql-se-04/include/Пример вывода успешного результата в консоль:

Configuring DBD::Pg 3.15.0

Enter a valid PostgreSQL postgres major version number 11

Enter a valid PostgreSQL postgres minor version number 7

Enter a valid PostgreSQL postgres patch version number 0

PostgreSQL version: 110700 (default port: 5432)

POSTGRES_HOME: (not set)

POSTGRES_INCLUDE: /usr/pgsql-se-04/include

POSTGRES_LIB: /usr/pgsql-se-04/lib

OS: linux

Checking if your kit is complete...

Looks good

Using DBI 1.627 (for perl 5.016003 on x86_64-linux-thread-multi) installed in /usr/lib64/perl5/vendor_perl/auto/DBI/

Writing Makefile for DBD::Pg

Writing MYMETA.yml and MYMETA.json -

выполнить инсталляцию:

sudo make

sudo make install

Возможные ошибки при установке:

-

Если не удалось определить версию PostgreSQL автоматически, будет выдано предложение:

Enter a valid PostgreSQL postgres major version number 11

Enter a valid PostgreSQL postgres minor version number 7

Enter a valid PostgreSQL postgres patch version number 0 -

В процессе установки драйвера PostgreSQL

DBD::Pgвозможно прерывание по ошибке:perl Makefile.PL -p && sudo make && sudo make install

Configuring DBD::Pg 3.15.0

Enter a valid PostgreSQL postgres major version number 11

Enter a valid PostgreSQL postgres minor version number 7

Enter a valid PostgreSQL postgres patch version number 0

PostgreSQL version: 110700 (default port: 5432)

POSTGRES_HOME: (not set)

POSTGRES_INCLUDE: /usr/pgsql-se-04/include

POSTGRES_LIB: /usr/pgsql-se-04/lib

OS: linux

The value of POSTGRES_INCLUDE points to a non-existent directory: /usr/pgsql-se-04/include

Cannot build unless the directories exist, exiting.

make: *** No targets specified and no makefile found. Stop.В этом случае необходимо сделать следующее:

export PATH="$PATH:$PGHOME/bin";- проверить наличие каталога

includeпо пути/usr/pgsql-se-04/.

-

Возможно появление следующей ошибки:

Pg.h:35:22: fatal error: libpq-fe.h: No such file or directoryВ этом случае необходимо установить модуль

postgresql-devel:yum install postgresql-devel

Для обеспечения возможности миграции с использованием аутентификации в базе данных Pangolin по паролю с использованием метода аутентификации SCRAM-SHA-256, необходимо использовать клиент PostgreSQL версии не ниже 10. В противном случае, возникнет исключительная ситуация вида:

DBI connect('dbname=name;host=server-name0001.ru;port=5433','nnn',...) failed: SCRAM authentication requires libpq version 10 or above at /usr/local/share/perl5/Ora2Pg.pm line 1854.

[2022-05-26 13:41:59] FATAL: 1 ... SCRAM authentication requires libpq version 10 or above

Aborting export...

Варианты решения данной проблемы:

-

Обновите версию библиотеки

libpq. -

Используйте метод аутентификации

md5. -

Удалите/переименуйте все символические ссылки /файлы

libpq.*в каталоге/usr/lib64/:cp /usr/pgsql-se-04/lib/libpq.so.5.11 /usr/lib64/

cp /usr/pgsql-se-04/lib/libfe_elog.so /usr/lib64/

cd /usr/lib64/

chmod 644 libpq.so.5.11

chmod 644 libfe_elog.so

ln libpq.so.5.11 libpq.so.5

ln libpq.so.5.11 libpq.so

Модуль ora2pg

-

распаковать архив:

tar xvf ora2pg-23.0.tar.gz && cd ora2pg-23.0 -

выполнить сборку:

perl Makefile.PLПример вывода успешного результата в консоль:

which: no bzip2 in (/usr/local/bin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/home/user/.local/bin:/home/user/bin:/usr/lib/oracle/18.5/client64/bin)

Checking if your kit is complete...

Looks good

Writing Makefile for Ora2Pg

Done...

------------------------------------------------------------------------------

Please read documentation at http://ora2pg.darold.net/ before asking for help

------------------------------------------------------------------------------

Now type: make && make install -

выполнить инсталляцию:

make

sudo make installПример вывода успешного результата в консоль:

Installing /usr/local/share/perl5/Ora2Pg.pm

Installing /usr/local/share/perl5/Ora2Pg/PLSQL.pm

Installing /usr/local/share/perl5/Ora2Pg/MySQL.pm

Installing /usr/local/share/perl5/Ora2Pg/GEOM.pm

Installing /usr/local/share/man/man3/ora2pg.3

Installing /usr/local/bin/ora2pg_scanner

Installing /usr/local/bin/ora2pg

Installing default configuration file (ora2pg.conf.dist) to /etc/ora2pg

Appending installation info to /usr/lib64/perl5/perllocal.pod

Сборка rpm пакетов из исходного кода DBD-Oracle-1.80, DBD-Pg-3.15.0 и ora2pg-23.0

-

создать директории сборки:

mkdir -p rpm_ora2pg/{BUILD,BUILDROOT,RPMS,SOURCES,SPECS,SRPMS} -

скопировать архивы с исходным кодом в папку

SOURCES:cp {DBD-Oracle-1.80,DBD-Pg-3.15.0,ora2pg-23.0}.tar.gz rpm_ora2pg/SOURCES -

скопировать файлы спецификаций для сборки в папку

SPECS:cp {ora2pg,DBD-Oracle,DBD-Pg}.spec rpm_ora2pg/SPECS -

выполнить сборку rpm пакетов:

rpmbuild --define "_topdir /home/user/rpm_ora2pg" -bb rpm_ora2pg/SPECS/*

В параметре _topdir необходимо указать полный путь к папке, в которой создана структура для сборки.

Если сборка прошла без ошибок, то собранные rpm пакеты будут лежать в директории rpm_ora2pg/RPMS.

Подготовка к работе

Проверка соединения

Выполнить проверку соединения к базе данных Oracle можно, если установить пакет sqlplus:

sudo yum -y install oracle-instantclient18.5-sqlplus-18.5.0.0.0-3.x86_64.rpm

Шаблон строки подключения к базе данных Oracle с помощью клиента sqlplus:

sqlplus {username}/{password}@{oracle DB address}:{oracle DB port}/{oracle DB sid}

Пример вывода при успешном подключении к консоли:

SQL*Plus: Release 18.0.0.0.0 - Production on Tue Apr 4 11:41:51 2023

Version 18.5.0.0.0

Copyright (c) 1982, 2018, Oracle. All rights reserved.

Last Successful login time: Tue Apr 04 2023 11:38:40 +03:00

Connected to:

Oracle Database 21c Express Edition Release 21.0.0.0.0 - Production

Version 21.3.0.0.0

SQL>

При попытке запуска соединения возможно появление ошибки:

sqlplus: error while loading shared libraries: libaio.so.1: cannot open shared object file: No such file or directory

В таком случае установите пакет libaio1:

sudo yum install libaio1 libaio-devel

sudo yum install readline-devel.x86_64

Создание шаблона миграции

ora2pg --project_base <директория хранения шаблона> --init_project <имя проекта>

Например:

ora2pg --project_base ./migration --init_project test_project

Пример вывода результата:

Creating project test_project.

./migration/test_project/

schema/

dblinks/

directories/

functions/

grants/

mviews/

packages/

partitions/

procedures/

sequences/

synonyms/

tables/

tablespaces/

triggers/

types/

views/

sources/

functions/

mviews/

packages/

partitions/

procedures/

triggers/

types/

views/

data/

config/

reports/

Generating generic configuration file

Creating script export_schema.sh to automate all exports.

Creating script import_all.sh to automate all imports.

Создание структуры в целевой БД

Существует два способа миграции схемы Oracle и других типов объектов (типы, процедуры, функции, последовательности и другие) в целевую базу данных Pangolin.

- Использование скрипта из состава ora2pg.

sh export_schema.sh

Пример вывода:

[========================>] 3/3 tables (100.0%) end of scanning.

[========================>] 6/6 objects types (100.0%) end of objects auditing.

Running: ora2pg -p -t SEQUENCE -o sequence.sql -b ./schema/sequences -c ./config/ora2pg.conf

[========================>] 0/0 sequences (100.0%) end of output.

Running: ora2pg -p -t TABLE -o table.sql -b ./schema/tables -c ./config/ora2pg.conf

[========================>] 1/1 tables (100.0%) end of scanning.

Retrieving table partitioning information...

[========================>] 1/1 tables (100.0%) end of table export.

Running: ora2pg -p -t PACKAGE -o package.sql -b ./schema/packages -c ./config/ora2pg.conf

[========================>] 0/0 packages (100.0%) end of output.

...

# Обработка всех типов

...

Running: ora2pg -t TYPE -o type.sql -b ./sources/types -c ./config/ora2pg.conf

[========================>] 0/0 types (100.0%) end of output.

Running: ora2pg -t MVIEW -o mview.sql -b ./sources/mviews -c ./config/ora2pg.conf

[========================>] 0/0 materialized views (100.0%) end of output.

To extract data use the following command:

ora2pg -t COPY -o data.sql -b ./data -c ./config/ora2pg.conf

Полученные в результате работы скрипта SQL файлы необходимо загрузить в целевую базу данных:

psql -f <FILENAME> -h <PGSE_master_host> -p <port> -U [db_admin] -d database

- Использование Liquibase.

Если в продукте используются Liquibase, необходимо подготовить инициализирующие SQL-скрипты и наборы изменений (модели) в формате liquibase.

Необходимые пакеты:

liquibase-3.8.0-bin.tar.gz;postgresql-42.2.6.jar.

Простой пример генерации набора изменений:

liquibase

--driver=oracle.jdbc.OracleDriver

--classpath=ojdbc14.jar

--url="jdbc:oracle:thin:@<IP OR HOSTNAME>:<PORT>:<SERVICE NAME OR SID>"

--changeLogFile=db.changelog-1.0.xml

--username=<USERNAME>

--password=<PASSWORD>generateChangeLog

Импорт изменений в базу данных Pangolin:

liquibase --driver=org.postgresql.Driver \

--classpath=/<директория с драйвером>/postgresql-42.2.6.jar \

--url=jdbc:postgresql://127.0.0.1:5432/dbname \

--username=user \

--password=password \

--changeLogFile=./core/changelog.xml \

--logLevel=info update

В случае использования парамеризованных скриптов, можно использовать следующие параметры:

-Duser_name=user;-Dtablespace_i=ts,

где внутри скрипта параметры используются в виде ${parameter_name}

Оценка миграции

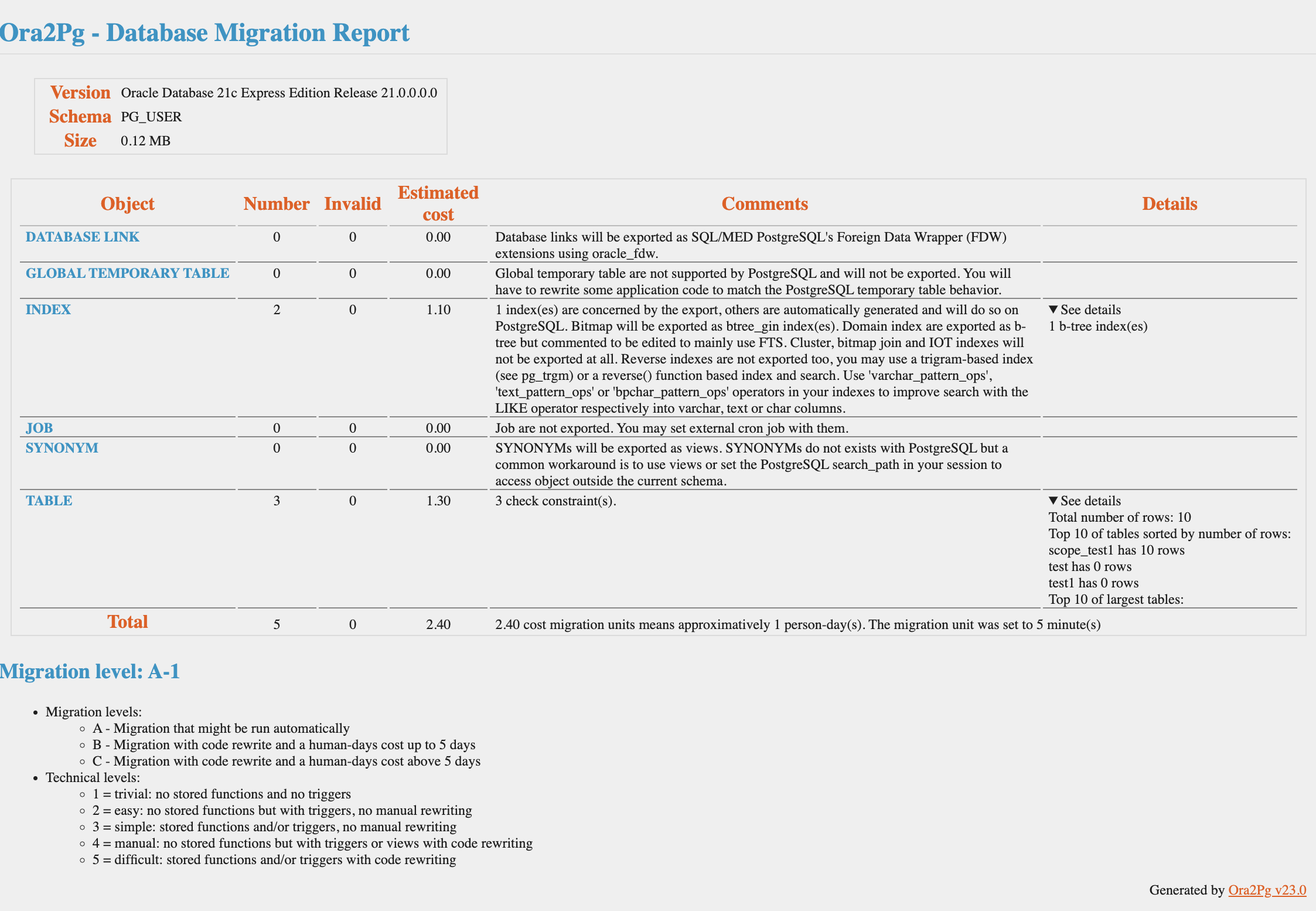

В результате работы скрипта export_schema.sh формируется отчет о миграции, который представляет собой отчет со списком всех объектов Oracle, предполагаемой стоимости миграции в днях разработчика и определенных объектов базы данных, которые могут потребовать особого внимания в процессе миграции.

Формирование отчета можно получить отдельно запуском утилиты с соответствующими параметрами.

Пример команды:

ora2pg -t SHOW_REPORT -c $namespace/config/ora2pg.conf --dump_as_html --cost_unit_value $unit_cost --estimate_cost > $namespace/reports/report.html

Пример отчета об оценке миграции в приложении Ora2Pg - Database Migration Report.

Настройка

Настройка параметров работы утилиты ora2pg выполняется установкой необходимых параметров в конфигурационном файле ora2pg.conf:

<ora2pg data folder>/<директория хранения шаблона>/<имя проекта>/config/ora2pg.conf

Основные параметры конфигурационного файла миграции ora2pg.conf

Подробное описание всех параметров представлено в официальной документации https://ora2pg.darold.net/documentation.html#CONFIGURATION или в Приложении к данному документу «Шаблон конфигурационного файла миграции ora2pg.conf».

-

параметры подключения к БД Oracle:

-

имя источника данных Oracle (DSN, Data Source Name); утилита ora2pg не поддерживает подключение к кластеру Oracle (ADG), поэтому в строке подключения указывается не более одного источника данных.

ORACLE_DSN – примеры возможных значений:

ORACLE_DSN dbi:Oracle:host={oracle_host_address};sid={oracle_db_sid};port={oracle_db_port}или

ORACLE_DSN dbi:Oracle:host={oracle_host_address};service_name={oracle_db_sn};port={oracle_db_port} -

имя пользователя и пароль для подключения к базе данных Oracle:

ORACLE_USER {username}

ORACLE_PWD {password}

-

-

режим отладки (опционально):

DEBUG 1 -

имя схемы Oracle:

SCHEMA <oracle_schema_name> -

имя схемы PostgreSQL:

PG_SCHEMA <pg_schema_name> -

определение клиентской кодировки на стороне Oracle и PostgreSQL:

NLS_LANG AMERICAN_AMERICA.AL32UTF8

NLS_NCHAR AL32UTF8

CLIENT_ENCODING UTF8 -

тип экспорта и фильтры:

TYPE <тип_экспорта> -

объекты экспорта; параметр определяет таблицы, которые нужно переносить; по умолчанию Ora2Pg экспортирует все объекты; значение должно быть представлено списком имен объектов или регулярных выражений, разделенных пробелом:

ALLOW TABLE_TESTПример:

ALLOW EMPLOYEES SALE_.* COUNTRIES .*_GEOM_SEQДля получения значений параметра ALLOW для текущей базы данных можно использовать пример скрипта; в результате работы скрипт возвращает строку, в которой через запятую перечислены все таблицы для выбранной схемы; вывод скрипта можно использовать в качестве значения параметра ALLOW:

DO $$

declare

text_out text;

t_name text;

begin

-- Пример задания условий

for t_name in select distinct table_name from information_schema.columns where table_schema='<schema name>' and table_name<>'<tablename>' and table_name<>'<tablename>' and table_name not like '%pg_%' and table_name not like '%cci_%'

loop

text_out=concat(text_out,t_name,' ');

end loop;

RAISE INFO'%',text_out;

end$$;Вариант в виде функции для регулярного использования:

create or replace function concat_table() returns text AS $$

declare

text_out text;

t_name text;

i int;

begin

-- Пример задания условий

for t_name in select distinct table_name from information_schema.columns where table_schema='<schema name>' and table_name<>'<tablename>' and table_name<>'<tablename>' and table_name not like '%pg_%' and table_name not like '%cci_%'

loop

text_out=concat(text_out,t_name,' ');

end loop;

return text_out;

end;

$$ language 'plpgsql'

select * from concat_table(); -

параметры подключения к базе данных Pangolin, в случае вывода напрямую в базу данных:

-

имя источника данных Pangolin (DSN, Data Source Name):

PG_DSN dbi:Pg:dbname={pangolin_db_sid};host={pangolin_host_address};port={pangolin_db_port}Если параметр

PG_DSNне задан, выгрузка будет выполняться в файл, заданный параметромOUTPUT. -

имя пользователя и пароль для подключения к базе данных Pangolin:

PG_USER {username}

PG_PWD {password}

-

-

имя файла для сохранения экспорта:

По умолчанию результат выводится в STDOUT, если он не отправляется в базу данных Pangolin. Если требуется сжатие Gzip, нужно добавить расширение

.gzк имени файла (используется Perl-модуль Compress::Zlib), и расширение.bz2для использования сжатия Bzip2.OUTPUT output.sql -

тип данных в Pangolin для замены типа

NUMBER()без точности; этот тип преобразуются по умолчанию вbigint, если параметрPG_INTEGER_TYPEимеет значениеtrue:DEFAULT_NUMERIC numeric -

параметр, определяющий замену

NUMBER(1,0)наBOOLEAN:REPLACE_AS_BOOLEAN NUMBER:1 -

количество одновременно извлекаемых кортежей:

DATA_LIMIT 10000 -

таблицы с набором столбцов, выбранные для извлечения или переноса; используется при известных отличиях в структуре и только с типом экспорта

INSERTилиCOPY; поля разделены пробелом или запятой:MODIFY_STRUCT TABLE_TEST(dico,dossier)Пример настройки параметра:

MODIFY_STRUCT t_deals_accesstool(object_id,plasticcard_id,sberbook_id,party_id,type_id,numbermask,product_id,locking,daterelease,begindate,enddate,reason_id,role,code,sourcesystemid,sourceid,templobjid,parentobjid,name,category_id,statushistory,chgcnt,status_id,offflag,sys_isdeleted,sys_partitionid,sys_lastchangedate,sys_ownerid,sys_affinityrootid,sys_recmodelversion) t_deals_accesstooldynamicparam(object_id,parentparam_id,accesstool_id,isrequired,readonly,paramtype_id,value_,keepinworkflow,code,sourcesystemid,sourceid,templobjid,parentobjid,name,category_id,statushistory,chgcnt,status_id,offflag,sys_isdeleted,sys_partitionid,sys_lastchangedate,sys_ownerid,sys_affinityrootid,sys_recmodelversion)Для получения значений параметра MODIFY_STRUCT для текущей базы данных можно использовать скрипт. В результате работы скрипт возвращает строку в формате:

<имя таблицы1>(<поле1>,<поле2>,....) <имя таблицы2>(<поле1>,<поле2>,....) .....Условие отбора таблиц в примере - наличие столбца

statushistory.Вывод может быть использован в качестве значения параметра

MODIFY_STRUCTв конфигурационный файл ora2pg (ora2pg.conf).DO $$

declare

text_out text;

t_name text;

t_column text;

begin

for t_name in select table_name from information_schema.columns where column_name='statushistory'

loop

for t_column in select array_to_string(array(select column_name from information_schema.columns where information_schema.columns.table_name=t_name),',')

loop

text_out=concat(text_out,t_name,'(',t_column,') ');

end loop;

end loop;

RAISE INFO '%',text_out;

end$$;Вариант в виде функции для регулярного использования:

create or replace function concat_column() returns text AS $$

declare

text_out text;

t_name text;

t_column text;

i int;

begin

for t_name in select table_name from information_schema.columns where column_name='statushistory'

loop

for t_column in select array_to_string(array(select column_name from information_schema.columns where information_schema.columns.table_name=t_name),',')

loop

text_out=concat(text_out,t_name,'(',t_column,') ');

raise notice '%s(%s)',t_name,t_column;

end loop;

end loop;

return text_out;

end;

$$ language 'plpgsql'

select * from concat_column(); -

имена таблиц для замены; дополнительно к параметрам

ALLOWиMODIFY_STRUCTпри известном несоответствии имен таблиц в источнике и приемнике можно использовать параметрREPLACE_TABLES:В примере таблицы

ORIG_TBNAME1иORIG_TBNAME2базы данных Oracle будут заменены в целевой базе данных Pangolin наDEST_TBNAME1иDEST_TBNAME2соответственно:REPLACE_TABLES ORIG_TBNAME1:DEST_TBNAME1 ORIG_TBNAME2:DEST_TBNAME2Список таблиц разделяется пробелом или запятой.

-

имена колонок таблиц для замены; список таблиц и столбцов разделяются запятой:

REPLACE_COLS ORIG_TBNAME(ORIG_COLNAME1:NEW_COLNAME1,ORIG_COLNAME2:NEW_COLNAME2) -

настройка функциональности по инкрементальному копированию DATADIFF (удаление и вставка только фактически измененных данных):

В секции DATADIFF представлены настройки экспериментальной функции для инкрементального копирования. Для корректной работы потребуется вручную сформировать файл инкремента, в котором распределить данные для вставки, обновления и удаления между таблицами с суффиксами:

_insдля вставки;_updдля обновления;_delдля удаления.

Порядок работы:

-

выборка удаляемых строк в промежуточную таблицу с суффиксом

_del; -

выборка вставляемых значений в таблицу с суффиксом

_ins; -

сравнение на уровне Pangolin таблиц

_delи_ins; -

удаление идентичных строк из таблиц

_delи_insдля того, чтобы не удалять данные из реальной таблицы, а только для повторной вставки; -

удаление оставшихся в таблице

_delстрок из реальной таблицы; -

вставка оставшихся строк из таблицы

_insв реальную таблицу; -

если есть PRIMARY KEY в реальной таблице, обнаружение строк, которые не идентичны в

_delи_ins, но имеют одинаковое значение для первичного ключа; -

перезапись их в

UPDATE, не вDELETE, после чего последуетINSERT; эти обновления хранятся в промежуточной таблице с суффиксом_updи удаляются из таблиц_delи_insдо их соответствующего выполнения. -

включение функциональности DATADIFF (

0– выключено,1– включено):DATADIFF 0 -

применение первичного ключа; использовать при

UPDATE, когда изменяемые строки могут быть сравнены, используя первичный ключ;1– включено:DATADIFF_UPDATE_BY_PKEY 0 -

имена суффиксов:

DATADIFF_DEL_SUFFIX _del

DATADIFF_UPD_SUFFIX _upd

DATADIFF_INS_SUFFIX _ins -

включение использования параметров

work_memиtemp_buffersдля хранения временных таблиц в памяти, эффективной сортировки:DATADIFF_WORK_MEM 256 MB

DATADIFF_TEMP_BUFFERS 512 MB -

имена функций, которые будут вызваны:

- перед началом операции DELETE и INSERT (

DATADIFF_BEFORE); - после началом операции DELETE и INSERT (

DATADIFF_AFTER); - после всей операции миграции (после COMMIT) (

DATADIFF_AFTER_ALL).

DATADIFF_BEFORE my_datadiff_handler_function

DATADIFF_AFTER my_datadiff_handler_function

DATADIFF_AFTER_ALL my_datadiff_bunch_handler_function - перед началом операции DELETE и INSERT (

Функционал инкрементального копирования не работает с партиционированными таблицами или параллельным выполнением.

Все данные извлекаются из базы данных Oracle при каждом запуске. В Pangolin идентичные строки будут уничтожены для того, чтобы в целевых таблицах не возникали лишниеоперации DELETE и INSERT. Для регулярной миграции данных требуется указать конфигурацию DELETE в дополнение к конфигурации WHERE. Если условие WHERE отсутствует,необходимо выполнить DELETE с условием 1=1 или подобным.

Параметры конфигурации, не описанные в данном разделе, используются по необходимости.

Подробное описание всех параметров представлено в официальной документации https://ora2pg.darold.net/documentation.html#CONFIGURATION или в Приложении к данному документу «Шаблон конфигурационного файла миграции ora2pg.conf».

Использование модуля

Перед началом использования модуля проверьте корректность настроек подключения к исходной базе данных Oracle.

Для проверки настроек подключения можно запустить утилиту с параметрами:

ora2pg -t SHOW_VERSION -c config/ora2pg.conf

В случае успешного подключения выводится следующий пример результата:

Oracle Database 21c Express Edition Release 21.0.0.0.0

В случае неудачного подключения получаем следующий пример ошибки:

FATAL: 12505 ... ORA-12505: TNS:listener does not currently know of SID given in connect descriptor (DBD ERROR: OCIServerAttach)

Aborting export...

Данная ошибка говорит о том, что в параметре ORACLE_DSN конфигурационного файла указан неверный SID базы данных и для успешного подключения необходимо указать service_name.

Запуск ora2pg

Для миграции необходимо запустить утилиту ora2pg с необходимыми параметрами.

Полный список и подробное описание всех параметров представлено в официальной документации https://ora2pg.darold.net/documentation.html#Ora2Pg-usage и в Приложении «Параметры запуска утилиты».

ora2pg -o data.sql -b ./data -c ./config/ora2pg.conf -j 16 -J 16

Пример вывода в консоли:

[========================>] 1/1 tables (100.0%) end of scanning.

[========================>] 150641/137483 rows (109.6%) Table T_DEALS_RATE (22 sec., 6847 recs/sec)

[========================>] 150641/137483 rows (109.6%) on total estimated data (22 sec., avg: 6847 tuples/sec)

Примеры

Миграция через выгрузку в файл

Установить значение параметра TYPE = TABLE.

ora2pg -o data.sql -b ./data -c ./config/ora2pg.conf -j 16 -J 16

Пример вывода результата в консоль:

[========================>] 1/1 tables (100.0%) end of scanning.

Retrieving table partitioning information...

[========================>] 1/1 tables (100.0%) end of table export.

В результате работы утилиты сформированы файлы:

-

./data/CONSTRAINTS_data.sql;Данный файл формируется в случае установки параметру

FILE_PER_CONSTRAINTзначения1. Пример содержимого при отсутствии ограничений на выгружаемой таблице:-- Generated by Ora2Pg, the Oracle database Schema converter, version 23.0

-- Copyright 2000-2021 Gilles DAROLD. All rights reserved.

-- DATASOURCE: dbi:Oracle:host=srv-0-216;service_name=xepdb1;port=1521

SET client_encoding TO 'UTF8';

\set ON_ERROR_STOP ON

-- Nothing found of type constraints -

./data/data.sql;Имя файла выгрузки структуры

data.sqlзадано в строке запуска с помощью ключа-o(--out file). Файл можно использовать для создания структуры в целевой базе данных.Пример структуры данных:

-- Generated by Ora2Pg, the Oracle database Schema converter, version 23.0

-- Copyright 2000-2021 Gilles DAROLD. All rights reserved.

-- DATASOURCE: dbi:Oracle:host=srv-0-216;service_name=xepdb1;port=1521

SET client_encoding TO 'UTF8';

\set ON_ERROR_STOP ON

CREATE TABLE scope_test1 (

col1 bigint NOT NULL,

col2 varchar(128) NOT NULL

) ; -

./data/INDEXES_data.sql.Данный файл формируется в случае установки параметру

FILE_PER_INDEXзначения1. Пример содержимого при наличии одного индекса на выгружаемой таблице:-- Generated by Ora2Pg, the Oracle database Schema converter, version 23.0

-- Copyright 2000-2021 Gilles DAROLD. All rights reserved.

-- DATASOURCE: dbi:Oracle:host=srv-0-216;service_name=xepdb1;port=1521

SET client_encoding TO 'UTF8';

\set ON_ERROR_STOP ON

CREATE INDEX scope_test1_col1_col2_idx ON scope_test1 (col1, col2);

Для выгрузки непосредственно самих данных установить для параметра TYPE значение COPY в конфигурационном файле или запустить с ключом -t (--type export). Ключ имеет приоритет, то есть значение параметра TYPE в конфигурационном файле будет проигнорировано.

ora2pg -o data.sql -b ./data -c ./config/ora2pg.conf -j 16 -J 16

Пример вывода результата в консоль:

[========================>] 1/1 tables (100.0%) end of scanning.

[========================>] 10/14 rows (100.0%) Table SCOPE_TEST1 (0 sec., 14 recs/sec)

[========================>] 10/14 rows (100.0%) on total estimated data (1 sec., avg: 14 tuples/sec)

В результате работы утилиты сформированы файлы:

-

./data/data.sql;Файл для загрузки данных в целевую данных Pangolin. Пример содержимого:

BEGIN;

ALTER TABLE scope_test1 DISABLE TRIGGER USER;

\i ./data/SCOPE_TEST1_data.sql

ALTER TABLE scope_test1 ENABLE TRIGGER USER;

COMMIT; -

./data/SCOPE_TEST1_data.sql.Содержимое файла с данными из выбранной таблицы (параметр

ALLOW = SCOPE_TEST1) с предварительной очисткой (параметрTRUNCATE_TABLE = 1):SET client_encoding TO 'UTF8';

SET synchronous_commit TO off;

TRUNCATE TABLE scope_test1;

COPY scope_test1 (col1,col2) FROM STDIN;

32 BUFFER_POOL DEFAULT FLASH_CACHE

74 POOL DEFAULT FLASH_CACHE

84 GROUPS POOL DEFAULT FLASH_CACHE

297 GROUPS 1 BUFFER_POOL DEFAULT

297 GROUPS 1 BUFFER_POOL DEFAULT FLASH_CACHE

401 BUFFER_POOL

402 POOL DEFAULT

403 GROUPS 1

404 GROUPS POOL

532 BUFFER_POOL DEFAULT

574 POOL DEFAULT

584 GROUPS POOL DEFAULT

734 GROUPS 1 BUFFER_POOL DEFAULT FLASH_CACHE

4034 FREELIST GROUPS 1 BUFFER_POOL DEFAULT FLASH_CACHE

\.

Прямая миграция

При задании конфигурационных параметров PG_DSN, PG_USER, PG_PWD и TYPE = COPY загрузка данных будет выполнена напрямую в целевую базу данных. Значение для параметра TYPE можно установить в строке запуска утилиты при помощи ключа -t (--type export), который имеет приоритет. При этом значение параметра TYPE в конфигурационном файле будет проигнорировано.

ora2pg -b ./data -c ./config/ora2pg.conf -j 16 -J 16

Пример вывода результата в консоль:

[========================>] 1/1 tables (100.0%) end of scanning.

[========================>] 10/10 rows (100.0%) Table SCOPE_TEST1 (0 sec., 10 recs/sec)

[========================>] 10/10 rows (100.0%) on total estimated data (1 sec., avg: 10 tuples/sec)

Проверка результата на целевой базе данных Pangolin подтверждает успешную миграцию данных из источника.

Возможные ошибки и проблемы

-

В Oracle отсутствует логическоий тип данных. В PostgreSQL есть

boolean. Перенос полей типаNUMBERиз Oracle в качестве логического типа можно выполнить с помощью хранимой процедуры, которая меняет тип в PostgreSQL наboolean. При этом необходимо в параметреREPLACE_AS_BOOLEANперечислить поля, которые должны попадать под это условие, так как не все данные, равные0или1являются логическими. -

Импорт материализованных представлений напрямую представляет сложность потому, что нет источника данных. Для решения этой проблемы создайте

VIEW, которое содержит все поля материализованного представления, и перенесите созданное представление как таблицу.

Ссылки на документацию разработчика

Дополнительно поставляемый модуль ora2pg: https://ora2pg.darold.net/documentation.html.

Приложения

Ora2Pg - Database Migration Report

Параметры запуска утилиты

Usage: ora2pg [-dhpqv --estimate_cost --dump_as_html] [--option value]

-a | --allow str : Comma separated list of objects to allow from export. Can be used with SHOW_COLUMN too.

-b | --basedir dir: Set the default output directory, where files resulting from exports will be stored.

-c | --conf file : Set an alternate configuration file other than the default /etc/ora2pg/ora2pg.conf.

-d | --debug : Enable verbose output.

-D | --data_type str : Allow custom type replacement at command line.

-e | --exclude str: Comma separated list of objects to exclude from export. Can be used with SHOW_COLUMN too.

-h | --help : Print this short help.

-g | --grant_object type : Extract privilege from the given object type. See possible values with GRANT_OBJECT configuration.

-i | --input file : File containing Oracle PL/SQL code to convert with no Oracle database connection initiated.

-j | --jobs num : Number of parallel process to send data to PostgreSQL.

-J | --copies num : Number of parallel connections to extract data from Oracle.

-l | --log file : Set a log file. Default is stdout.

-L | --limit num : Number of tuples extracted from Oracle and stored in memory before writing, default: 10000.

-m | --mysql : Export a MySQL database instead of an Oracle schema.

-n | --namespace schema : Set the Oracle schema to extract from.

-N | --pg_schema schema : Set PostgreSQL's search_path.

-o | --out file : Set the path to the output file where SQL will be written. Default: output.sql in running directory.

-p | --plsql : Enable PLSQL to PLPGSQL code conversion.

-P | --parallel num: Number of parallel tables to extract at the same time.

-q | --quiet : Disable progress bar.

-r | --relative : use \ir instead of \i in the psql scripts generated.

-s | --source DSN : Allow to set the Oracle DBI datasource.

-t | --type export: Set the export type. It will override the one given in the configuration file (TYPE).

-T | --temp_dir dir: Set a distinct temporary directory when two or more ora2pg are run in parallel.

-u | --user name : Set the Oracle database connection user. ORA2PG_USER environment variable can be used instead.

-v | --version : Show Ora2Pg Version and exit.

-w | --password pwd : Set the password of the Oracle database user. ORA2PG_PASSWD environment variable can be used instead.

-W | --where clause : Set the WHERE clause to apply to the Oracle query to retrieve data. Can be used multiple time.

--forceowner : Force ora2pg to set tables and sequences owner like in Oracle database. If the value is set to a username this one will be used as the objects owner. By default it's the user used to connect to the Pg database that will be the owner.

--nls_lang code : Set the Oracle NLS_LANG client encoding.

--client_encoding code: Set the PostgreSQL client encoding.

--view_as_table str: Comma separated list of views to export as table.

--estimate_cost : Activate the migration cost evaluation with SHOW_REPORT

--cost_unit_value minutes: Number of minutes for a cost evaluation unit. default: 5 minutes, corresponds to a migration conducted by a PostgreSQL expert. Set it to 10 if this is your first migration.

--dump_as_html : Force ora2pg to dump report in HTML, used only with SHOW_REPORT. Default is to dump report as simple text.

--dump_as_csv : As above but force ora2pg to dump report in CSV.

--dump_as_sheet : Report migration assessment with one CSV line per database.

--init_project name: Initialise a typical ora2pg project tree. Top directory will be created under project base dir.

--project_base dir : Define the base dir for ora2pg project trees. Default is current directory.

--print_header : Used with --dump_as_sheet to print the CSV header especially for the first run of ora2pg.

--human_days_limit num : Set the number of human-days limit where the migration assessment level switch from B to C. Default is set to 5 human-days.

--audit_user list : Comma separated list of usernames to filter queries in the DBA_AUDIT_TRAIL table. Used only with SHOW_REPORT and QUERY export type.

--pg_dsn DSN : Set the datasource to PostgreSQL for direct import.

--pg_user name : Set the PostgreSQL user to use.

--pg_pwd password : Set the PostgreSQL password to use.

--count_rows : Force ora2pg to perform a real row count in TEST action.

--no_header : Do not append Ora2Pg header to output file

--oracle_speed : Use to know at which speed Oracle is able to send data. No data will be processed or written.

--ora2pg_speed : Use to know at which speed Ora2Pg is able to send transformed data. Nothing will be written.

--blob_to_lo : export BLOB as large objects, can only be used with action SHOW_COLUMN, TABLE and INSERT.

See full documentation at https://ora2pg.darold.net/ for more help or see

manpage with 'man ora2pg'.

ora2pg will return 0 on success, 1 on error. It will return 2 when a child

process has been interrupted and you've gotten the warning message:

"WARNING: an error occurs during data export. Please check what's happen."

Most of the time this is an OOM issue, first try reducing DATA_LIMIT value.

Шаблон конфигурационного файла миграции ora2pg.conf

#################### Ora2Pg Configuration file #####################

# Support for including a common config file that may contain any

# of the following configuration directives.

#IMPORT common.conf

#------------------------------------------------------------------------------

# INPUT SECTION (Oracle connection or input file)

#------------------------------------------------------------------------------

# Set this directive to a file containing PL/SQL Oracle Code like function,

# procedure or a full package body to prevent Ora2Pg from connecting to an

# Oracle database end just apply his conversion tool to the content of the

# file. This can only be used with the following export type: PROCEDURE,

# FUNCTION or PACKAGE. If you don't know what you do don't use this directive.

#INPUT_FILE ora_plsql_src.sql

# Set the Oracle home directory

ORACLE_HOME /usr/lib/oracle/18.5/client64

# Set Oracle database connection (datasource, user, password)

ORACLE_DSN dbi:Oracle:host=mydb.mydom.fr;sid=SIDNAME;port=1521

ORACLE_USER system

ORACLE_PWD manager

# Set this to 1 if you connect as simple user and can not extract things

# from the DBA_... tables. It will use tables ALL_... This will not works

# with GRANT export, you should use an Oracle DBA username at ORACLE_USER

USER_GRANTS 1

# Trace all to stderr

DEBUG 0

# This directive can be used to send an initial command to Oracle, just after

# the connection. For example to unlock a policy before reading objects or

# to set some session parameters. This directive can be used multiple time.

#ORA_INITIAL_COMMAND

#------------------------------------------------------------------------------

# SCHEMA SECTION (Oracle schema to export and use of schema in PostgreSQL)

#------------------------------------------------------------------------------

# Export Oracle schema to PostgreSQL schema

EXPORT_SCHEMA 0

# Oracle schema/owner to use

SCHEMA CHANGE_THIS_SCHEMA_NAME

# Enable/disable the CREATE SCHEMA SQL order at starting of the output file.

# It is enable by default and concern on TABLE export type.

CREATE_SCHEMA 1

# Enable this directive to force Oracle to compile schema before exporting code.

# When this directive is enabled and SCHEMA is set to a specific schema name,

# only invalid objects in this schema will be recompiled. If SCHEMA is not set

# then all schema will be recompiled. To force recompile invalid object in a

# specific schema, set COMPILE_SCHEMA to the schema name you want to recompile.

# This will ask to Oracle to validate the PL/SQL that could have been invalidate

# after a export/import for example. The 'VALID' or 'INVALID' status applies to

# functions, procedures, packages and user defined types.

COMPILE_SCHEMA 1

# By default if you set EXPORT_SCHEMA to 1 the PostgreSQL search_path will be

# set to the schema name exported set as value of the SCHEMA directive. You can

# defined/force the PostgreSQL schema to use by using this directive.

#

# The value can be a comma delimited list of schema but not when using TABLE

# export type because in this case it will generate the CREATE SCHEMA statement

# and it doesn't support multiple schema name. For example, if you set PG_SCHEMA

# to something like "user_schema, public", the search path will be set like this

# SET search_path = user_schema, public;

# forcing the use of an other schema (here user_schema) than the one from Oracle

# schema set in the SCHEMA directive. You can also set the default search_path

# for the PostgreSQL user you are using to connect to the destination database

# by using:

# ALTER ROLE username SET search_path TO user_schema, public;

#in this case you don't have to set PG_SCHEMA.

#PG_SCHEMA

# Use this directive to add a specific schema to the search path to look

# for PostGis functions.

#POSTGIS_SCHEMA

# Allow to add a comma separated list of system user to exclude from

# Oracle extraction. Oracle have many of them following the modules

# installed. By default it will suppress all object owned by the following

# system users:

# 'SYSTEM','CTXSYS','DBSNMP','EXFSYS','LBACSYS','MDSYS','MGMT_VIEW',

# 'OLAPSYS','ORDDATA','OWBSYS','ORDPLUGINS','ORDSYS','OUTLN',

# 'SI_INFORMTN_SCHEMA','SYS','SYSMAN','WK_TEST','WKSYS','WKPROXY',

# 'WMSYS','XDB','APEX_PUBLIC_USER','DIP','FLOWS_020100','FLOWS_030000',

# 'FLOWS_040100','FLOWS_010600','FLOWS_FILES','MDDATA','ORACLE_OCM',

# 'SPATIAL_CSW_ADMIN_USR','SPATIAL_WFS_ADMIN_USR','XS$NULL','PERFSTAT',

# 'SQLTXPLAIN','DMSYS','TSMSYS','WKSYS','APEX_040000','APEX_040200',

# 'DVSYS','OJVMSYS','GSMADMIN_INTERNAL','APPQOSSYS','DVSYS','DVF',

# 'AUDSYS','APEX_030200','MGMT_VIEW','ODM','ODM_MTR','TRACESRV','MTMSYS',

# 'OWBSYS_AUDIT','WEBSYS','WK_PROXY','OSE$HTTP$ADMIN',

# 'AURORA$JIS$UTILITY$','AURORA$ORB$UNAUTHENTICATED',

# 'DBMS_PRIVILEGE_CAPTURE','CSMIG','MGDSYS','SDE','DBSFWUSER'

# Other list of users set to this directive will be added to this list.

#SYSUSERS OE,HR

# List of schema to get functions/procedures meta information that are used

# in the current schema export. When replacing call to function with OUT

# parameters, if a function is declared in an other package then the function

# call rewriting can not be done because Ora2Pg only know about functions

# declared in the current schema. By setting a comma separated list of schema

# as value of this directive, Ora2Pg will look forward in these packages for

# all functions/procedures/packages declaration before proceeding to current

# schema export.

#LOOK_FORWARD_FUNCTION SCOTT,OE

# Force Ora2Pg to not look for function declaration. Note that this will prevent

# Ora2Pg to rewrite function replacement call if needed. Do not enable it unless

# looking forward at function breaks other export.

NO_FUNCTION_METADATA 0

#------------------------------------------------------------------------------

# ENCODING SECTION (Define client encoding at Oracle and PostgreSQL side)

#------------------------------------------------------------------------------

# Enforce default language setting following the Oracle database encoding. This

# may be used with multibyte characters like UTF8. Here are the default values

# used by Ora2Pg, you may not change them unless you have problem with this

# encoding. This will set $ENV{NLS_LANG} to the given value.

#NLS_LANG AMERICAN_AMERICA.AL32UTF8

# This will set $ENV{NLS_NCHAR} to the given value.

#NLS_NCHAR AL32UTF8

# By default PostgreSQL client encoding is automatically set to UTF8 to avoid

# encoding issue. If you have changed the value of NLS_LANG you might have to

# change the encoding of the PostgreSQL client.

#CLIENT_ENCODING UTF8

# To force utf8 encoding of the PL/SQL code exported, enable this directive.

# Could be helpful in some rare condition.

FORCE_PLSQL_ENCODING 0

#------------------------------------------------------------------------------

# EXPORT SECTION (Export type and filters)

#------------------------------------------------------------------------------

# Type of export. Values can be the following keyword:

# TABLE Export tables, constraints, indexes, ...

# PACKAGE Export packages

# INSERT Export data from table as INSERT statement

# COPY Export data from table as COPY statement

# VIEW Export views

# GRANT Export grants

# SEQUENCE Export sequences

# TRIGGER Export triggers

# FUNCTION Export functions

# PROCEDURE Export procedures

# TABLESPACE Export tablespace (PostgreSQL >= 8 only)

# TYPE Export user defined Oracle types

# PARTITION Export range or list partition (PostgreSQL >= v8.4)

# FDW Export table as foreign data wrapper tables

# MVIEW Export materialized view as snapshot refresh view

# QUERY Convert Oracle SQL queries from a file.

# KETTLE Generate XML ktr template files to be used by Kettle.

# DBLINK Generate oracle foreign data wrapper server to use as dblink.

# SYNONYM Export Oracle's synonyms as views on other schema's objects.

# DIRECTORY Export Oracle's directories as external_file extension objects.

# LOAD Dispatch a list of queries over multiple PostgreSQl connections.

# TEST perform a diff between Oracle and PostgreSQL database.

# TEST_COUNT perform only a row count between Oracle and PostgreSQL tables.

# TEST_VIEW perform a count on both side of number of rows returned by views

# TEST_DATA perform data validation check on rows at both sides.

TYPE TABLE

# Set this to 1 if you don't want to export comments associated to tables and

# column definitions. Default is enabled.

DISABLE_COMMENT 0

# Set which object to export from. By default Ora2Pg export all objects.

# Value must be a list of object name or regex separated by space. Note

# that regex will not works with 8i database, use % placeholder instead

# Ora2Pg will use the LIKE operator. There is also some extended use of

# this directive, see chapter "Limiting object to export" in documentation.

#ALLOW TABLE_TEST

# Set which object to exclude from export process. By default none. Value

# must be a list of object name or regexp separated by space. Note that regex

# will not works with 8i database, use % placeholder instead Ora2Pg will use

# the NOT LIKE operator. There is also some extended use of this directive,

# see chapter "Limiting object to export" in documentation.

#EXCLUDE OTHER_TABLES

# Set which view to export as table. By default none. Value must be a list of

# view name or regexp separated by space. If the object name is a view and the

# export type is TABLE, the view will be exported as a create table statement.

# If export type is COPY or INSERT, the corresponding data will be exported.

#VIEW_AS_TABLE VIEW_NAME

# By default Ora2Pg try to order views to avoid error at import time with

# nested views. With a huge number of view this can take a very long time,

# you can bypass this ordering by enabling this directive.

NO_VIEW_ORDERING 0

# When exporting GRANTs you can specify a comma separated list of objects

# for which privilege will be exported. Default is export for all objects.

# Here are the possibles values TABLE, VIEW, MATERIALIZED VIEW, SEQUENCE,

# PROCEDURE, FUNCTION, PACKAGE BODY, TYPE, SYNONYM, DIRECTORY. Only one object

# type is allowed at a time. For example set it to TABLE if you just want to

# export privilege on tables. You can use the -g option to overwrite it.

# When used this directive prevent the export of users unless it is set to

# USER. In this case only users definitions are exported.

#GRANT_OBJECT TABLE

# By default Ora2Pg will export your external table as file_fdw tables. If

# you don't want to export those tables at all, set the directive to 0.

EXTERNAL_TO_FDW 1

# Add a TRUNCATE TABLE instruction before loading data on COPY and INSERT

# export. When activated, the instruction will be added only if there's no

# global DELETE clause or one specific to the current table (see bellow).

TRUNCATE_TABLE 1

# Support for include a DELETE FROM ... WHERE clause filter before importing

# data and perform a delete of some lines instead of truncatinf tables.

# Value is construct as follow: TABLE_NAME[DELETE_WHERE_CLAUSE], or

# if you have only one where clause for all tables just put the delete

# clause as single value. Both are possible too. Here are some examples:

#DELETE 1=1 # Apply to all tables and delete all tuples

#DELETE TABLE_TEST[ID1='001'] # Apply only on table TABLE_TEST

#DELETE TABLE_TEST[ID1='001' OR ID1='002] DATE_CREATE > '2001-01-01' TABLE_INFO[NAME='test']

# The last applies two different delete where clause on tables TABLE_TEST and

# TABLE_INFO and a generic delete where clause on DATE_CREATE to all other tables.

# If TRUNCATE_TABLE is enabled it will be applied to all tables not covered by

# the DELETE definition.

# When enabled this directive forces ora2pg to export all tables, index

# constraints, and indexes using the tablespace name defined in Oracle database.

# This works only with tablespaces that are not TEMP, USERS and SYSTEM.

USE_TABLESPACE 0

# Enable this directive to reorder columns and minimized the footprint

# on disk, so that more rows fit on a data page, which is the most important

# factor for speed. Default is same order than in Oracle table definition,

# that should be enough for most usage.

REORDERING_COLUMNS 0

# Support for include a WHERE clause filter when dumping the contents

# of tables. Value is construct as follow: TABLE_NAME[WHERE_CLAUSE], or

# if you have only one where clause for each table just put the where

# clause as value. Both are possible too. Here are some examples:

#WHERE 1=1 # Apply to all tables

#WHERE TABLE_TEST[ID1='001'] # Apply only on table TABLE_TEST

#WHERE TABLE_TEST[ID1='001' OR ID1='002] DATE_CREATE > '2001-01-01' TABLE_INFO[NAME='test']

# The last applies two different where clause on tables TABLE_TEST and

# TABLE_INFO and a generic where clause on DATE_CREATE to all other tables

# Sometime you may want to extract data from an Oracle table but you need a

# a custom query for that. Not just a "SELECT * FROM table" like Ora2Pg does

# but a more complex query. This directive allows you to override the query

# used by Ora2Pg to extract data. The format is TABLENAME[SQL_QUERY].

# If you have multiple tables to extract by replacing the Ora2Pg query, you can

# define multiple REPLACE_QUERY lines.

#REPLACE_QUERY EMPLOYEES[SELECT e.id,e.fisrtname,lastname FROM EMPLOYEES e JOIN EMP_UPDT u ON (e.id=u.id AND u.cdate>'2014-08-01 00:00:00')]

# To add a DROP <OBJECT> IF EXISTS before creating the object, enable

# this directive. Can be useful in an iterative work. Default is disabled.

DROP_IF_EXISTS 0

#------------------------------------------------------------------------------

# FULL TEXT SEARCH SECTION (Control full text search export behaviors)

#------------------------------------------------------------------------------

# Force Ora2Pg to translate Oracle Text indexes into PostgreSQL indexes using

# pg_trgm extension. Default is to translate CONTEXT indexes into FTS indexes

# and CTXCAT indexes using pg_trgm. Most of the time using pg_trgm is enough,

# this is why this directive stand for.

#

CONTEXT_AS_TRGM 0

# By default Ora2Pg creates a function-based index to translate Oracle Text

# indexes.

# CREATE INDEX ON t_document

# USING gin(to_tsvector('french', title));

# You will have to rewrite the CONTAIN() clause using to_tsvector(), example:

# SELECT id,title FROM t_document

# WHERE to_tsvector(title)) @@ to_tsquery('search_word');

#

# To force Ora2Pg to create an extra tsvector column with a dedicated triggers

# for FTS indexes, disable this directive. In this case, Ora2Pg will add the

# column as follow: ALTER TABLE t_document ADD COLUMN tsv_title tsvector;

# Then update the column to compute FTS vectors if data have been loaded before

# UPDATE t_document SET tsv_title =

# to_tsvector('french', coalesce(title,''));

# To automatically update the column when a modification in the title column

# appears, Ora2Pg adds the following trigger:

#

# CREATE FUNCTION tsv_t_document_title() RETURNS trigger AS $$

# BEGIN

# IF TG_OP = 'INSERT' OR new.title != old.title THEN

# new.tsv_title :=

# to_tsvector('french', coalesce(new.title,''));

# END IF;

# return new;

# END

# $$ LANGUAGE plpgsql;

# CREATE TRIGGER trig_tsv_t_document_title BEFORE INSERT OR UPDATE

# ON t_document

# FOR EACH ROW EXECUTE PROCEDURE tsv_t_document_title();

#

# When the Oracle text index is defined over multiple column, Ora2Pg will use

# setweight() to set a weight in the order of the column declaration.

#

FTS_INDEX_ONLY 1

# Use this directive to force text search configuration to use. When it is not

# set, Ora2Pg will autodetect the stemmer used by Oracle for each index and

# pg_catalog.english if nothing is found.

#

#FTS_CONFIG pg_catalog.french

# If you want to perform your text search in an accent insensitive way, enable

# this directive. Ora2Pg will create an helper function over unaccent() and

# creates the pg_trgm indexes using this function. With FTS Ora2Pg will

# redefine your text search configuration, for example:

#

# CREATE TEXT SEARCH CONFIGURATION fr (COPY = pg_catalog.french);

# ALTER TEXT SEARCH CONFIGURATION fr

# ALTER MAPPING FOR hword, hword_part, word WITH unaccent, french_stem;

#

# When enabled, Ora2pg will create the wrapper function:

#

# CREATE OR REPLACE FUNCTION unaccent_immutable(text)

# RETURNS text AS

# $$

# SELECT public.unaccent('public.unaccent', )

# $$ LANGUAGE sql IMMUTABLE

# COST 1;

#

# indexes are exported as follow:

#

# CREATE INDEX t_document_title_unaccent_trgm_idx ON t_document

# USING gin (unaccent_immutable(title) gin_trgm_ops);

#

# In your queries you will need to use the same function in the search to

# be able to use the function-based index. Example:

#

# SELECT * FROM t_document

# WHERE unaccent_immutable(title) LIKE '%donnees%';

#

USE_UNACCENT 0

# Same as above but call lower() in the unaccent_immutable() function:

#

# CREATE OR REPLACE FUNCTION unaccent_immutable(text)

# RETURNS text AS

# $$

# SELECT lower(public.unaccent('public.unaccent', ));

# $$ LANGUAGE sql IMMUTABLE;

#

USE_LOWER_UNACCENT 0

#------------------------------------------------------------------------------

# DATA DIFF SECTION (only delete and insert actually changed rows)

#------------------------------------------------------------------------------

# EXPERIMENTAL! Not yet working correctly with partitioned tables, parallelism,

# and direct Postgres connection! Test before using in production!

# This feature affects SQL output for data (INSERT or COPY).

# The deletion and (re-)importing of data is redirected to temporary tables

# (with configurable suffix) and matching entries (i.e. quasi-unchanged rows)

# eliminated before actual application of the DELETE, UPDATE and INSERT.

# Optional functions can be specified that are called before or after the

# actual DELETE, UPDATE and INSERT per table, or after all tables have been

# processed.

#

# Enable DATADIFF functionality

DATADIFF 0

# Use UPDATE where changed columns can be matched by the primary key

# (otherwise rows are DELETEd and re-INSERTed, which may interfere with

# inverse foreign keys relationships!)

DATADIFF_UPDATE_BY_PKEY 0

# Suffix for temporary tables holding rows to be deleted and to be inserted.

# Pay attention to your tables names:

# 1) There better be no two tables with names such that name1 + suffix = name2

# 2) length(suffix) + length(tablename) < NAMEDATALEN (usually 64)

DATADIFF_DEL_SUFFIX _del

DATADIFF_UPD_SUFFIX _upd

DATADIFF_INS_SUFFIX _ins

# Allow setting the work_mem and temp_buffers parameters

# to keep temp tables in memory and have efficient sorting, etc.

DATADIFF_WORK_MEM 256 MB

DATADIFF_TEMP_BUFFERS 512 MB

# The following are names of functions that will be called (via SELECT)

# after the temporary tables have been reduced (by removing matching rows)

# and right before or right after the actual DELETE and INSERT are performed.

# They must take four arguments, which should ideally be of type "regclass",

# representing the real table, the "deletions", the "updates", and the

# "insertions" temp table names, respectively. They are called before

# re-activation of triggers, indexes, etc. (if configured).

#DATADIFF_BEFORE my_datadiff_handler_function

#DATADIFF_AFTER my_datadiff_handler_function

# Another function can be called (via SELECT) right before the entire COMMIT

# (i.e., after re-activation of indexes, triggers, etc.), which will be

# passed in Postgres ARRAYs of the table names of the real tables, the

# "deletions", the "updates" and the "insertions" temp tables, respectively,

# with same array index positions belonging together. So this function should

# take four arguments of type regclass[]

#DATADIFF_AFTER_ALL my_datadiff_bunch_handler_function

# If in doubt, use schema-qualified function names here.

# The search_path will have been set to PG_SCHEMA if EXPORT_SCHEMA == 1

# (as defined by you in those config parameters, see above),

# i.e., the "public" schema is not contained if EXPORT_SCHEMA == 1

#------------------------------------------------------------------------------

# CONSTRAINT SECTION (Control constraints export and import behaviors)

#------------------------------------------------------------------------------

# Support for turning off certain schema features in the postgres side

# during schema export. Values can be : fkeys, pkeys, ukeys, indexes, checks

# separated by a space character.

# fkeys : turn off foreign key constraints

# pkeys : turn off primary keys

# ukeys : turn off unique column constraints

# indexes : turn off all other index types

# checks : turn off check constraints

#SKIP fkeys pkeys ukeys indexes checks

# By default names of the primary and unique key in the source Oracle database

# are ignored and key names are autogenerated in the target PostgreSQL database

# with the PostgreSQL internal default naming rules. If you want to preserve

# Oracle primary and unique key names set this option to 1.

# Please note if value of USE_TABLESPACE is set to 1 the value of this option is

# enforced to 1 to preserve correct primary and unique key allocation to tablespace.

KEEP_PKEY_NAMES 0

# Enable this directive if you want to add primary key definitions inside the

# create table statements. If disabled (the default) primary key definition

# will be added with an alter table statement. Enable it if you are exporting

# to GreenPlum PostgreSQL database.

PKEY_IN_CREATE 0

# This directive allow you to add an ON UPDATE CASCADE option to a foreign

# key when a ON DELETE CASCADE is defined or always. Oracle do not support

# this feature, you have to use trigger to operate the ON UPDATE CASCADE.

# As PostgreSQL has this feature, you can choose how to add the foreign

# key option. There is three value to this directive: never, the default

# that mean that foreign keys will be declared exactly like in Oracle.

# The second value is delete, that mean that the ON UPDATE CASCADE option

# will be added only if the ON DELETE CASCADE is already defined on the

# foreign Keys. The last value, always, will force all foreign keys to be

# defined using the update option.

FKEY_ADD_UPDATE never

# When exporting tables, Ora2Pg normally exports constraints as they are;

# if they are non-deferrable they are exported as non-deferrable.

# However, non-deferrable constraints will probably cause problems when

# attempting to import data to PostgreSQL. The following option set to 1

# will cause all foreign key constraints to be exported as deferrable

FKEY_DEFERRABLE 0

# In addition when exporting data the DEFER_FKEY option set to 1 will add

# a command to defer all foreign key constraints during data export and

# the import will be done in a single transaction. This will work only if

# foreign keys have been exported as deferrable and you are not using direct

# import to PostgreSQL (PG_DSN is not defined). Constraints will then be

# checked at the end of the transaction. This directive can also be enabled

# if you want to force all foreign keys to be created as deferrable and

# initially deferred during schema export (TABLE export type).

DEFER_FKEY 0

# If deferring foreign keys is not possible due to the amount of data in a

# single transaction, you have not exported foreign keys as deferrable or you

# are using direct import to PostgreSQL, you can use the DROP_FKEY directive.

# It will drop all foreign keys before all data import and recreate them at

# the end of the import.

DROP_FKEY 0

#------------------------------------------------------------------------------

# TRIGGERS AND SEQUENCES SECTION (Control triggers and sequences behaviors)

#------------------------------------------------------------------------------

# Disables alter of sequences on all tables in COPY or INSERT mode.

# Set to 1 if you want to disable update of sequence during data migration.

DISABLE_SEQUENCE 1

# Disables triggers on all tables in COPY or INSERT mode. Available modes

# are USER (user defined triggers) and ALL (includes RI system

# triggers). Default is 0 do not add SQL statement to disable trigger.

# If you want to disable triggers during data migration, set the value to

# USER if your are connected as non superuser and ALL if you are connected

# as PostgreSQL superuser. A value of 1 is equal to USER.

DISABLE_TRIGGERS 1

#------------------------------------------------------------------------------

# OBJECT MODIFICATION SECTION (Control objects structure or name modifications)

#------------------------------------------------------------------------------

# You may wish to just extract data from some fields, the following directives

# will help you to do that. Works only with export type INSERT or COPY

# Modify output from the following tables(fields separate by space or comma)

#MODIFY_STRUCT TABLE_TEST(dico,dossier)

# You may wish to change table names during data extraction, especally for

# replication use. Give a list of tables separate by space as follow.

#REPLACE_TABLES ORIG_TB_NAME1:NEW_TB_NAME1 ORIG_TB_NAME2:NEW_TB_NAME2

# You may wish to change column names during export. Give a list of tables

# and columns separate by comma as follow.

#REPLACE_COLS TB_NAME(ORIG_COLNAME1:NEW_COLNAME1,ORIG_COLNAME2:NEW_COLNAME2)

# By default all object names are converted to lower case, if you

# want to preserve Oracle object name as-is set this to 1. Not recommended

# unless you always quote all tables and columns on all your scripts.

PRESERVE_CASE 0

# Add the given value as suffix to index names. Useful if you have indexes

# with same name as tables. Not so common but it can help.

#INDEXES_SUFFIX _idx

# Enable this directive to rename all indexes using tablename_columns_names.

# Could be very useful for database that have multiple time the same index name

# or that use the same name than a table, which is not allowed by PostgreSQL

# Disabled by default.

INDEXES_RENAMING 0

# Operator classes text_pattern_ops, varchar_pattern_ops, and bpchar_pattern_ops

# support B-tree indexes on the corresponding types. The difference from the

# default operator classes is that the values are compared strictly character by

# character rather than according to the locale-specific collation rules. This

# makes these operator classes suitable for use by queries involving pattern

# matching expressions (LIKE or POSIX regular expressions) when the database

# does not use the standard "C" locale. If you enable, with value 1, this will

# force Ora2Pg to export all indexes defined on varchar2() and char() columns

# using those operators. If you set it to a value greater than 1 it will only

# change indexes on columns where the charactere limit is greater or equal than

# this value. For example, set it to 128 to create these kind of indexes on

# columns of type varchar2(N) where N >= 128.

USE_INDEX_OPCLASS 0

# Enable this directive if you want that your partition table name will be

# exported using the parent table name. Disabled by default. If you have

# multiple partitioned table, when exported to PostgreSQL some partitions

# could have the same name but different parent tables. This is not allowed,

# table name must be unique.

PREFIX_PARTITION 0

# Disable this directive if your subpartitions are dedicated to your partition

# (in case of your partition_name is a part of your subpartition_name)

PREFIX_SUB_PARTITION 1

# If you do not want to reproduce the partitioning like in Oracle and want to

# export all partitionned Oracle data into the main single table in PostgreSQL

# enable this directive. Ora2Pg will export all data into the main table name.

# Default is to use partitionning, Ora2Pg will export data from each partition

# and import them into the PostgreSQL dedicated partition table.

DISABLE_PARTITION 0

# Activating this directive will force Ora2Pg to add WITH (OIDS) when creating

# tables or views as tables. Default is same as PostgreSQL, disabled.

WITH_OID 0

# Allow escaping of column name using Oracle reserved words.

ORA_RESERVED_WORDS audit,comment,references

# Enable this directive if you have tables or column names that are a reserved

# word for PostgreSQL. Ora2Pg will double quote the name of the object.

USE_RESERVED_WORDS 0

# By default Ora2Pg export Oracle tables with the NOLOGGING attribute as

# UNLOGGED tables. You may want to fully disable this feature because

# you will lost all data from unlogged table in case of PostgreSQL crash.

# Set it to 1 to export all tables as normal table.

DISABLE_UNLOGGED 1

#------------------------------------------------------------------------------

# OUTPUT SECTION (Control output to file or PostgreSQL database)

#------------------------------------------------------------------------------

# Define the following directive to send export directly to a PostgreSQL

# database, this will disable file output. Note that these directives are only

# used for data export, other export need to be imported manually through the

# use of psql or any other PostgreSQL client.

#PG_DSN dbi:Pg:dbname=test_db;host=localhost;port=5432

#PG_USER test

#PG_PWD test

# By default all output is dump to STDOUT if not send directly to postgresql

# database (see above). Give a filename to save export to it. If you want

# a Gzip-ed compressed file just add the extension .gz to the filename (you

# need perl module Compress::Zlib from CPAN). Add extension .bz2 to use Bzip2

# compression.

OUTPUT output.sql

# Base directory where all dumped files must be written

#OUTPUT_DIR /var/tmp

# Path to the bzip2 program. See OUTPUT directive above.

BZIP2

# Allow object constraints to be saved in a separate file during schema export.

# The file will be named CONSTRAINTS_OUTPUT. Where OUTPUT is the value of the

# corresponding configuration directive. You can use .gz xor .bz2 extension to

# enable compression. Default is to save all data in the OUTPUT file. This

# directive is usable only with TABLE export type.

FILE_PER_CONSTRAINT 1

# Allow indexes to be saved in a separate file during schema export. The file

# will be named INDEXES_OUTPUT. Where OUTPUT is the value of the corresponding

# configuration directive. You can use the .gz, .xor, or .bz2 file extension to

# enable compression. Default is to save all data in the OUTPUT file. This

# directive is usable only with TABLE or TABLESPACE export type. With the

# TABLESPACE export, it is used to write "ALTER INDEX ... TABLESPACE ..." into

# a separate file named TBSP_INDEXES_OUTPUT that can be loaded at end of the

# migration after the indexes creation to move the indexes.

FILE_PER_INDEX 1

# Allow foreign key declaration to be saved in a separate file during

# schema export. By default foreign keys are exported into the main

# output file or in the CONSTRAINT_output.sql file. When enabled foreign

# keys will be exported into a file named FKEYS_output.sql

FILE_PER_FKEYS 1

# Allow data export to be saved in one file per table/view. The files

# will be named as tablename_OUTPUT. Where OUTPUT is the value of the

# corresponding configuration directive. You can use .gz xor .bz2

# extension to enable compression. Default is to save all data in one

# file. This is usable only during INSERT or COPY export type.

FILE_PER_TABLE 1

# Allow function export to be saved in one file per function/procedure.

# The files will be named as funcname_OUTPUT. Where OUTPUT is the value

# of the corresponding configuration directive. You can use .gz xor .bz2

# extension to enable compression. Default is to save all data in one

# file. It is usable during FUNCTION, PROCEDURE, TRIGGER and PACKAGE

# export type.

FILE_PER_FUNCTION 1

# By default Ora2Pg will force Perl to use utf8 I/O encoding. This is done through

# a call to the Perl pragma:

#

# use open ':utf8';

#

# You can override this encoding by using the BINMODE directive, for example you

# can set it to :locale to use your locale or iso-8859-7, it will respectively use

#

# use open ':locale';

# use open ':encoding(iso-8859-7)';

#

# If you have change the NLS_LANG in non UTF8 encoding, you might want to set this

# directive. See http://perldoc.perl.org/5.14.2/open.html for more information.

# Most of the time, you might leave this directive commented.

#BINMODE utf8

# Set it to 0 to not include the call to \set ON_ERROR_STOP ON in all SQL

# scripts. By default this order is always present.

STOP_ON_ERROR 1

# Enable this directive to use COPY FREEZE instead of a simple COPY to

# export data with rows already frozen. This is intended as a performance

# option for initial data loading. Rows will be frozen only if the table

# being loaded has been created or truncated in the current subtransaction.

# This will only works with export to file and when -J or ORACLE_COPIES is

# not set or default to 1. It can be used with direct import into PostgreSQL

# under the same condition but -j or JOBS must also be unset or default to 1.

COPY_FREEZE 0

# By default Ora2Pg use CREATE OR REPLACE in functions and views DDL, if you

# need not to override existing functions or views disable this configuration